How to Optimize WordPress Robots.txt for SEO: The Evergreen Guide

In the world of Technical SEO, if Google Search Console is your diagnostic dashboard and Google Analytics 4 (GA4) is your performance engine, then Robots.txt is your website’s traffic controller. It quietly decides how search engine crawlers interact with your site and which areas deserve priority.

Often misunderstood as a security tool, the Robots.txt file actually directs bots, helps manage your crawl budget and steers Google toward the content you want it to see. In this guide, we will break down everything you need to know about optimizing your WordPress Robots.txt for maximum performance.

Although it looks like a simple text file, a poorly configured Robots.txt can silently harm your rankings, waste crawl budget or even delay Google AdSense approval. On the other hand, a clean and optimized Robots.txt helps search engines focus on your most valuable content while ignoring unnecessary system pages.

This evergreen guide explains what Robots.txt is, why it matters for SEO, how to optimize it for WordPress, and how to avoid the most common mistakes — all in a practical, beginner‑friendly way.


- What Is Robots.txt and Why Does It Matter?

- Robots.txt vs Noindex: Important Clarification

- The Ideal Robots.txt File for WordPress

- How to Edit Robots.txt in WordPress (Step‑by‑Step)

- Common Robots.txt Mistakes That Hurt SEO

- How to Test Your Robots.txt File

- Frequently Asked Questions (FAQ)

- Conclusion: Build a Crawl‑Friendly, Authority Website

What Is Robots.txt and Why Does It Matter?

Robots.txt is a plain text file located in the root directory of your website:

https://yourwebsite.com/robots.txt

It follows the Robots Exclusion Protocol (REP) and tells search engine bots which parts of your website they are allowed to crawl and which parts they should avoid.
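To see how a rule-following crawler applies these instructions, here is a minimal sketch. It uses Python's standard-library `urllib.robotparser` as a stand-in for a search engine bot. The rules and URLs are illustrative placeholders. This parser also applies the first matching rule, whereas Google applies the most specific one, so treat it only as an approximation of Googlebot.

```python
from urllib.robotparser import RobotFileParser

# Illustrative rules; a real site serves these at https://yourwebsite.com/robots.txt
ROBOTS_TXT = """\
User-agent: *
Disallow: /wp-admin/
"""

parser = RobotFileParser()
# parse() takes the file's lines; here the whole file is a single group
parser.parse(ROBOTS_TXT.splitlines())

for url in (
    "https://yourwebsite.com/blog/robots-txt-guide/",
    "https://yourwebsite.com/wp-admin/options.php",
):
    # can_fetch() answers: may this user agent crawl this URL?
    verdict = "crawl" if parser.can_fetch("Googlebot", url) else "skip"
    print(f"{verdict}: {url}")
```

The blog post is reported as crawlable and the admin page as skipped, which is exactly the split a compliant crawler makes.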

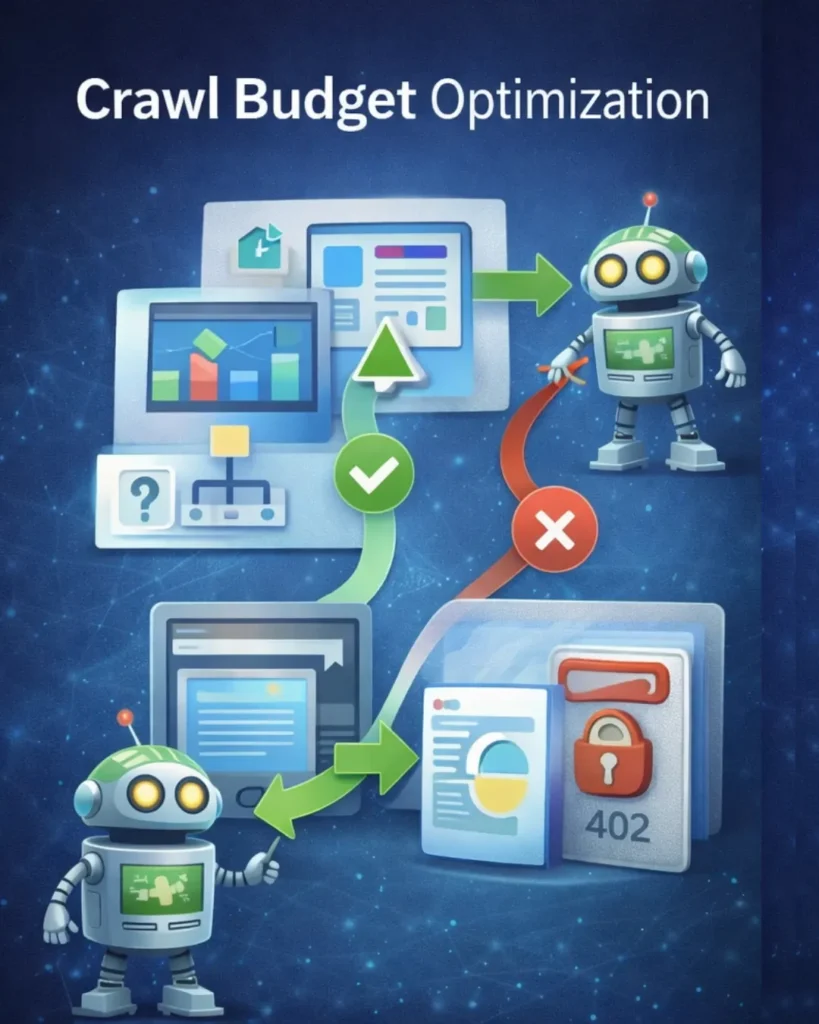

Its primary SEO role is crawl budget optimization.

Search engines do not crawl every page on your website all the time. Instead, they allocate a limited amount of crawling resources. If those resources are wasted on login pages, admin files or duplicate URLs, your important blog posts and landing pages may get crawled less frequently.

A well‑configured Robots.txt ensures that:

- Important content is crawled and indexed efficiently

- Low‑value or sensitive areas are ignored

- Server load stays optimized

- Google clearly understands your site structure

Robots.txt vs Noindex: Important Clarification

Robots.txt does not remove pages from Google search results. It only prevents crawling.

If you want to completely remove a page from search results, you must use:

- noindex meta tags

- Password protection

- Search Console removal tools

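Robots.txt should be used only for crawl management, not content hiding. Keep in mind that Google has to crawl a page to see its noindex tag. If you rely on noindex to keep a page out of search results, do not block that page in Robots.txt.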
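Because a crawl block hides a page's noindex tag from Google, it can be worth checking for pages that have both. Below is a hedged sketch of such a check. `noindex_conflicts` and `RobotsMetaFinder` are hypothetical helpers, the page HTML is made-up sample data, and only `<meta name="robots">` tags are inspected (not `X-Robots-Tag` headers). `urllib.robotparser` stands in for Googlebot's rule matching.

```python
from html.parser import HTMLParser
from urllib.robotparser import RobotFileParser


class RobotsMetaFinder(HTMLParser):
    """Collects the content of every <meta name="robots"> tag in a page."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: list[str] = []

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "meta" and (attributes.get("name") or "").lower() == "robots":
            self.directives.append((attributes.get("content") or "").lower())


def has_noindex(html: str) -> bool:
    """True if a robots meta tag in the HTML contains noindex."""
    finder = RobotsMetaFinder()
    finder.feed(html)
    return any("noindex" in directive for directive in finder.directives)


def noindex_conflicts(robots_txt: str, pages: dict[str, str]) -> list[str]:
    """Hypothetical helper: URLs marked noindex that Googlebot is told not to crawl.

    Googlebot must fetch a page to see its noindex tag, so each URL returned
    here hides its own noindex instruction.
    """
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [
        url
        for url, html in pages.items()
        if has_noindex(html) and not parser.can_fetch("Googlebot", url)
    ]


# Illustrative data: both pages carry noindex, but only one is crawlable
robots_txt = "User-agent: *\nDisallow: /thank-you/\n"
pages = {
    "https://yourwebsite.com/thank-you/": '<meta name="robots" content="noindex">',
    "https://yourwebsite.com/old-offer/": '<meta name="robots" content="noindex, follow">',
}
print(noindex_conflicts(robots_txt, pages))  # ['https://yourwebsite.com/thank-you/']
```

The `/old-offer/` page is fine because Google can crawl it and read its noindex. The `/thank-you/` page is flagged because its noindex will never be seen.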

Why Robots.txt Is Critical for WordPress SEO

Many bloggers ignore their Robots.txt because WordPress generates a “virtual” one by default. However, a custom-optimized file offers several important benefits.

WordPress automatically generates many URLs that have little or no SEO value, such as:

- /wp-admin/ backend pages

- Login and system files

- Internal utility files

Without guidance, search engine bots may waste time crawling these pages instead of your actual content.

Key SEO Benefits of an Optimized Robots.txt

1. Efficient Crawl Budget Usage

Search engines have a limited “crawl budget” for every website: roughly, the number of URLs they can and want to crawl on your site in a given period.

If your site has thousands of useless URLs (like internal search results or backend files), the bot might leave before it ever finds your most important articles.

By blocking these low-value areas, you steer compliant bots toward your high-quality content, such as:

- Blog posts

- Category pages

- Important landing pages

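This can improve indexing speed and consistency, especially on larger sites. Google notes that crawl budget is mostly a concern for very large or frequently updated websites.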
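To make the crawl-budget idea concrete, here is a sketch that measures what share of a sample of crawler requests lands on paths your rules would block. The URL list is made-up sample data; in practice you might pull one from your server logs. `blocked_share` is a hypothetical helper, and `urllib.robotparser` stands in for a compliant crawler.

```python
from urllib.robotparser import RobotFileParser

# Illustrative rules: a subset of a typical WordPress robots.txt
ROBOTS_TXT = """\
User-agent: *
Disallow: /wp-admin/
Disallow: /refer/
"""

# Hypothetical sample of URLs a crawler requested
CRAWLED = [
    "https://yourwebsite.com/wp-admin/options.php",
    "https://yourwebsite.com/wp-admin/edit.php",
    "https://yourwebsite.com/refer/example-product/",
    "https://yourwebsite.com/blog/robots-txt-guide/",
    "https://yourwebsite.com/category/seo/",
]


def blocked_share(robots_txt: str, urls: list[str], agent: str = "Googlebot") -> float:
    """Fraction of the requested URLs that the rules disallow for `agent`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    blocked = sum(1 for url in urls if not parser.can_fetch(agent, url))
    return blocked / len(urls)


print(f"{blocked_share(ROBOTS_TXT, CRAWLED):.0%} of this sample hit low-value paths")
```

In this sample, 3 of 5 requests (60%) went to backend or redirect URLs. Those are the requests a compliant crawler would no longer spend once the rules are in place.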

2. Better Page Rendering

Googlebot renders pages much like a modern browser, so it needs access to your CSS and JavaScript files to understand your layout and mobile responsiveness.

A proper Robots.txt allows required resources while blocking sensitive areas.

3. Preventing Server Overload

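Aggressive crawlers can slow down your website by making too many requests at once. A well-configured Robots.txt can ask specific crawlers to stay away, and well-behaved bots will comply. Truly malicious “bad bots” usually ignore Robots.txt, though. Block those at the server or firewall level (for example, through your host or a security plugin) to keep your site fast for real human users.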
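Robots.txt rules are grouped by user agent: a crawler follows the group that names it and falls back to the `User-agent: *` group otherwise. The sketch below shows that fallback. `ExampleBadBot` is a hypothetical crawler name, and `urllib.robotparser` stands in for a compliant crawler. Remember that a real bad bot can simply ignore these lines.

```python
from urllib.robotparser import RobotFileParser

# "ExampleBadBot" is a hypothetical crawler name used for illustration
ROBOTS_TXT = """\
User-agent: ExampleBadBot
Disallow: /

User-agent: *
Disallow: /wp-admin/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

post = "https://yourwebsite.com/blog/robots-txt-guide/"
print(parser.can_fetch("ExampleBadBot", post))  # False: its own group blocks everything
print(parser.can_fetch("Googlebot", post))      # True: falls back to the * group
```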

4. Optimizing for AdSense Approval

If you are currently going through the AdSense review process, your Robots.txt is vital. Google uses a specific crawler called Mediapartners-Google to crawl and review your site. If your file accidentally tells this crawler “Disallow: /”, you are effectively locking the door and telling Google it isn’t allowed inside to review your site.

If Robots.txt blocks this crawler, AdSense may fail to review your site.

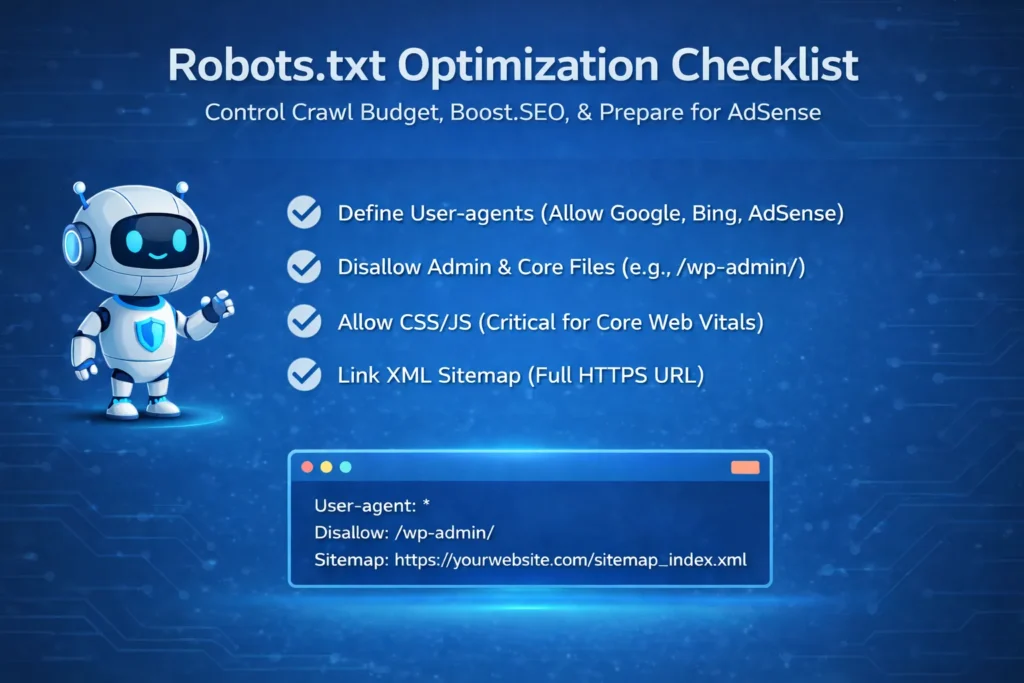

Optimizing Robots.txt for AdSense Approval

For AdSense review and long‑term compliance:

✅ What to Allow

- Mediapartners-Google crawler

- Content pages

- Images and other assets your pages use

❌ What to Avoid

Never add this rule:

User-agent: Mediapartners-Google

Disallow: /

Blocking the AdSense crawler prevents Google from evaluating your site quality.

The safest approach is not to mention Mediapartners-Google at all. It then follows your general User-agent: * rules, so just make sure those rules don’t block your content pages.
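A quick way to confirm that the AdSense crawler is not locked out is to test your rules against its user-agent token. Below is a minimal sketch using `urllib.robotparser` as a stand-in for Google's parser. `adsense_can_crawl` is a hypothetical helper, and the rule sets are illustrative. The third case uses an empty `Disallow:` value, which grants full access.

```python
from urllib.robotparser import RobotFileParser

ADSENSE_AGENT = "Mediapartners-Google"


def adsense_can_crawl(robots_txt: str, url: str = "https://yourwebsite.com/") -> bool:
    """Hypothetical helper: may the AdSense crawler fetch `url` under these rules?"""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(ADSENSE_AGENT, url)


harmful = "User-agent: Mediapartners-Google\nDisallow: /\n"
safe = "User-agent: *\nDisallow: /wp-admin/\n"
explicit = "User-agent: Mediapartners-Google\nDisallow:\n"  # empty value = allow all

print(adsense_can_crawl(harmful))   # False
print(adsense_can_crawl(safe))      # True
print(adsense_can_crawl(explicit))  # True
```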

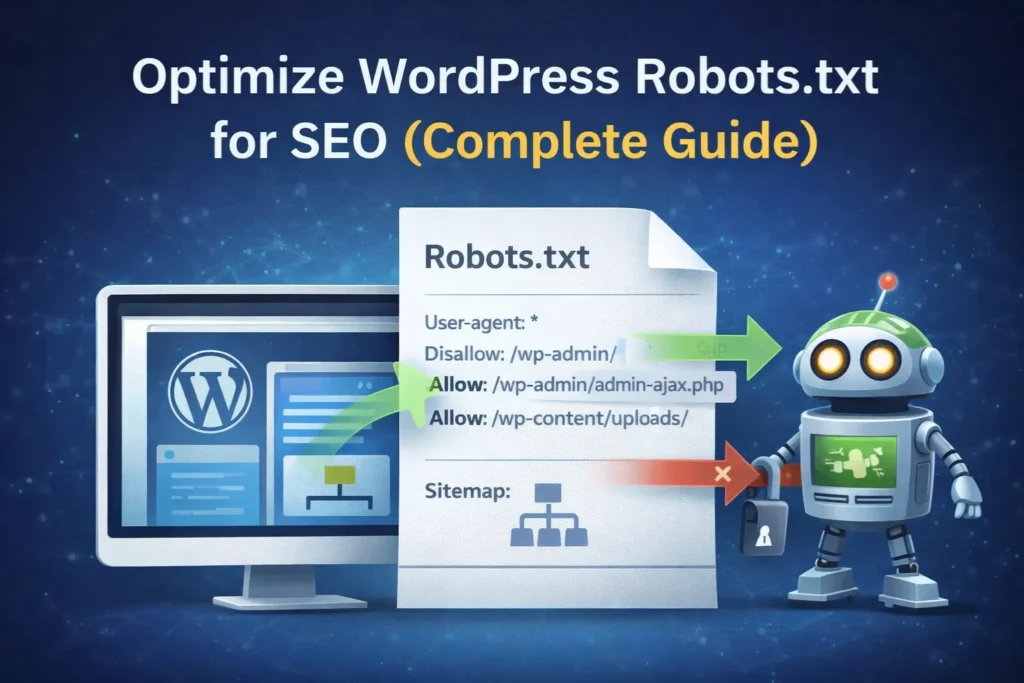

The Ideal Robots.txt File for WordPress

Below is a clean, high‑performance, evergreen Robots.txt template suitable for most WordPress sites:

User-agent: *

Disallow: /wp-admin/

Allow: /wp-admin/admin-ajax.php

Allow: /wp-content/uploads/

Disallow: /readme.html

Disallow: /refer/

Sitemap: https://yourwebsite.com/sitemap_index.xml

Explanation of Each Directive

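- User-agent: * → Applies the rules to all bots

- Disallow: /wp-admin/ → Blocks the WordPress backend

- Allow: /wp-admin/admin-ajax.php → Keeps the AJAX endpoint that many themes and plugins use for dynamic front-end features crawlable

- Allow: /wp-content/uploads/ → Allows images and media to be crawled and indexed

- Disallow: /readme.html → Blocks WordPress’s default readme file, which has no search value

- Disallow: /refer/ → Blocks a site-specific folder (for example, affiliate redirect links); remove this line if your site has no /refer/ folder

- Sitemap: → Direct path to your XML sitemap for faster discovery; replace it with your own sitemap URL (Yoast SEO uses sitemap_index.xml, while WordPress’s built-in sitemap lives at wp-sitemap.xml)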
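Why does the `admin-ajax.php` exception work even though it sits inside a blocked folder? Google, following the Robots Exclusion Protocol standard (RFC 9309), applies the most specific, meaning longest, matching rule. Allow wins a tie. The sketch below is a simplified model of that behavior, not Google's actual parser. It handles a single group plus the `*` and `$` wildcards, and its helper names are hypothetical. Python's built-in `urllib.robotparser` is avoided here because it applies the first matching rule instead, which would wrongly block `admin-ajax.php`.

```python
import re

TEMPLATE = """\
User-agent: *
Disallow: /wp-admin/
Allow: /wp-admin/admin-ajax.php
Allow: /wp-content/uploads/
Disallow: /readme.html
Disallow: /refer/
Sitemap: https://yourwebsite.com/sitemap_index.xml
"""


def parse_single_group(text: str) -> tuple[list[tuple[str, str]], list[str]]:
    """Split a one-group robots.txt into (Allow/Disallow rules, Sitemap URLs)."""
    rules, sitemaps = [], []
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and whitespace
        if ":" not in line:
            continue
        field, value = (part.strip() for part in line.split(":", 1))
        field = field.lower()  # field names are case-insensitive
        if field in ("allow", "disallow"):
            rules.append((field, value))
        elif field == "sitemap":
            sitemaps.append(value)
    return rules, sitemaps


def pattern_matches(pattern: str, path: str) -> bool:
    """'*' matches any characters; a trailing '$' anchors the end of the path."""
    anchored = pattern.endswith("$")
    body = re.escape(pattern[:-1] if anchored else pattern).replace(r"\*", ".*")
    return re.match(body + ("$" if anchored else ""), path) is not None


def is_allowed(rules: list[tuple[str, str]], path: str) -> bool:
    """Longest matching pattern wins; on a tie, Allow wins. No match means allowed."""
    best_len, allowed = -1, True
    for field, pattern in rules:
        if not pattern or not pattern_matches(pattern, path):
            continue  # an empty value never blocks anything
        is_allow = field == "allow"
        if len(pattern) > best_len or (len(pattern) == best_len and is_allow):
            best_len, allowed = len(pattern), is_allow
    return allowed


rules, sitemaps = parse_single_group(TEMPLATE)
for path in ("/wp-admin/options.php", "/wp-admin/admin-ajax.php",
             "/wp-content/uploads/2024/01/photo.jpg", "/refer/example-product/",
             "/blog/robots-txt-guide/"):
    print("allow" if is_allowed(rules, path) else "block", path)
print("sitemaps:", sitemaps)
```

Running it shows the backend and /refer/ paths blocked, while admin-ajax.php, uploads and blog posts stay crawlable.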

The Anatomy of a Perfect Robots.txt File

A professional Robots.txt file consists of these main parts:

- User-agent: This specifies which bot the rule applies to. Using an asterisk (*) means the rule applies to all bots.

- Disallow: This tells the bot which folders or pages it should stay away from.

- Allow: This creates exceptions. For example, you might block a whole folder but “Allow” one specific important file inside it.

- Sitemap: This provides the direct path to your XML sitemap, making it easier for Google to find your newest posts.

How to Edit Robots.txt in WordPress (Step‑by‑Step)

Method 1: Using an SEO Plugin (Recommended)

If you use Yoast SEO or All in One SEO, editing Robots.txt is simple and safe.

Using the Yoast SEO File Editor

If you aren’t a developer, don’t worry: you don’t need complex coding or FTP. If you use Yoast SEO, the process is simple and safe.

- Log in to your WordPress Dashboard.

- Navigate to Yoast SEO in the left sidebar and click on Tools.

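- Click on the File Editor link. (Note: If you don’t see it, file editing has probably been disabled on your site for security, either by your host or in your WordPress configuration. In that case, upload the file to your site’s root folder using FTP or your host’s file manager.)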

- If you haven’t created a file yet, click the button that says “Create robots.txt file”.

- Yoast will show a text box where you can paste the code provided above.

- Click Save changes to robots.txt.

Using All in One SEO (AIOSEO)

- Go to AIOSEO → Tools → Robots.txt

- Enable custom Robots.txt

- Add directives

- Save settings

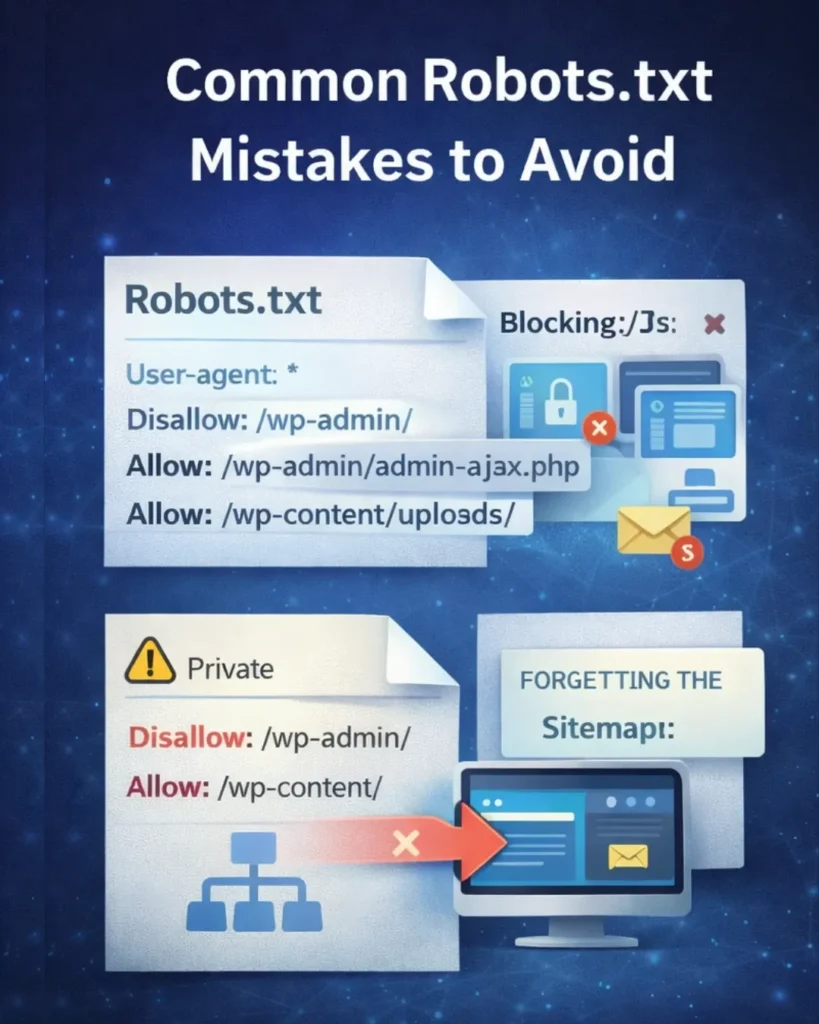

Common Robots.txt Mistakes That Hurt SEO

Even a single typo in this file can have massive consequences. Avoid these common pitfalls:

1. Blocking CSS and JavaScript Files

Older guides used to suggest blocking the /wp-includes/ folder. Do not do this. Google needs to see your CSS and JavaScript to render your pages the way visitors see them and to judge whether they are mobile-friendly. Blocking these files can make your pages look broken to Google.

Avoid rules like:

Disallow: /wp-content/

Disallow: /wp-includes/

Besides your theme’s CSS and JavaScript, /wp-content/ also holds your plugin files and uploaded images, so blocking it hides far more than backend code.
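To check whether your rules block the files Google needs for rendering, you can test a few asset URLs against them. The sketch below does that with `urllib.robotparser` as a stand-in for Googlebot. `blocked_assets` is a hypothetical helper, and `example-theme` is a placeholder for your active theme's folder.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical asset URLs; "example-theme" stands in for your active theme
ASSETS = [
    "https://yourwebsite.com/wp-content/themes/example-theme/style.css",
    "https://yourwebsite.com/wp-includes/js/jquery/jquery.min.js",
]


def blocked_assets(robots_txt: str, urls: list[str]) -> list[str]:
    """Return the CSS/JS URLs that Googlebot would be told not to fetch."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch("Googlebot", url)]


outdated = "User-agent: *\nDisallow: /wp-content/\nDisallow: /wp-includes/\n"
recommended = "User-agent: *\nDisallow: /wp-admin/\n"

print(blocked_assets(outdated, ASSETS))     # both files are blocked
print(blocked_assets(recommended, ASSETS))  # []
```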

2. Using Robots.txt for Security

Robots.txt is publicly accessible: anyone can read it by typing /robots.txt after your domain, so never use it to hide “private” pages. Listing a sensitive folder there can even point people straight to it. If you have sensitive data, protect it with a password. A “Noindex” tag only keeps a page out of search results; it does not secure it.

3. Forgetting the Sitemap

Always include your full sitemap URL (an absolute URL, starting with https://). It is usually placed at the bottom, although a Sitemap line works anywhere in the file. Listing it helps search engines discover your new content faster.
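If you want to double-check this before publishing, the sketch below reads the Sitemap lines with `urllib.robotparser` (its `site_maps()` method needs Python 3.8 or later). It then flags a missing or relative sitemap URL. `sitemap_problems` is a hypothetical helper, and the sample files are illustrative.

```python
from urllib.parse import urlparse
from urllib.robotparser import RobotFileParser


def sitemap_problems(robots_txt: str) -> list[str]:
    """Hypothetical check: is there a Sitemap line, and is each URL absolute?"""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    sitemaps = parser.site_maps() or []  # site_maps() returns None when absent
    if not sitemaps:
        return ["no Sitemap line found"]
    return [
        f"not an absolute URL: {url}"
        for url in sitemaps
        if urlparse(url).scheme not in ("http", "https")
    ]


good = "User-agent: *\nDisallow: /wp-admin/\nSitemap: https://yourwebsite.com/sitemap_index.xml\n"
print(sitemap_problems(good))                                 # []
print(sitemap_problems("User-agent: *\nDisallow: /wp-admin/\n"))  # ['no Sitemap line found']
```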

4. Case Sensitivity Errors

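The paths in Robots.txt rules are case‑sensitive: Disallow: /Private/ will NOT block the folder /private/. (Directive names such as Disallow are not case-sensitive.) Always match the exact case of your URLs; WordPress slugs are lowercase by default.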
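The difference between case-sensitive paths and case-insensitive field names is easy to demonstrate. This minimal sketch uses `urllib.robotparser`, which behaves the same way on both points. The folder names are illustrative.

```python
from urllib.robotparser import RobotFileParser

# Field names are case-insensitive ("disallow" works), but the path "/Private/" is not
ROBOTS_TXT = "user-agent: *\ndisallow: /Private/\n"

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

print(parser.can_fetch("Googlebot", "https://yourwebsite.com/Private/report/"))  # False
print(parser.can_fetch("Googlebot", "https://yourwebsite.com/private/report/"))  # True
```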

How to Test Your Robots.txt File

After you publish your changes, you should verify them using Google Search Console.

- Go to the “Settings” tab in Search Console.

- Under Crawling, open the robots.txt report. (The Crawl stats report next to it shows how often Google crawls your site.)

- Google will show you whether it has successfully fetched the file and whether there are any errors or warnings. If none are listed, Google can read your file correctly.
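Search Console tells you how Google read the live file. To catch typos before you publish, a small local linter can help. The sketch below is a hypothetical pre-publish check. It flags unknown field names (such as a misspelled Disallow), lines without a colon, rules that appear before any User-agent line, and paths that don't start with / or *. Google's own parser is more sophisticated, so a clean result here is no substitute for the Search Console report.

```python
KNOWN_FIELDS = {"user-agent", "allow", "disallow", "sitemap", "crawl-delay"}


def lint_robots(text: str) -> list[str]:
    """Hypothetical pre-publish check for common robots.txt typos."""
    problems = []
    seen_user_agent = False
    for number, raw in enumerate(text.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()  # ignore comments and blank lines
        if not line:
            continue
        if ":" not in line:
            problems.append(f"line {number}: missing ':' separator")
            continue
        field, value = (part.strip() for part in line.split(":", 1))
        field = field.lower()
        if field not in KNOWN_FIELDS:
            problems.append(f"line {number}: unknown field '{field}'")
        elif field == "user-agent":
            seen_user_agent = True
        elif field in ("allow", "disallow"):
            if not seen_user_agent:
                problems.append(f"line {number}: {field} before any User-agent line")
            if value and not value.startswith(("/", "*")):
                problems.append(f"line {number}: path should start with '/' or '*'")
    return problems


draft = "User-agent: *\nDissallow: /wp-admin/\nDisallow wp-includes\nAllow: wp-content/uploads/\n"
for problem in lint_robots(draft):
    print(problem)
```

On this draft, the linter reports the misspelled field on line 2, the missing colon on line 3 and the relative path on line 4.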

Frequently Asked Questions (FAQ)

1. Does Robots.txt remove a page from Google search?

No. It only stops Google from crawling the page. If another website links to that page, Google might still index it based on the link text. To completely remove a page from search results, you must use a noindex meta tag.

2. Should I block my WordPress “Category” or “Tag” pages?

In most cases, no. While some SEOs believe this saves crawl budget, category pages often help Google understand your site structure and can even rank for broad keywords.

3. How long does it take for Google to see my changes?

Google generally caches your Robots.txt file for up to 24 hours. If you need Google to pick up your changes sooner, you can request a recrawl of the file from the robots.txt report in Google Search Console.

4. Can I have more than one Robots.txt file?

No. Each site can have only one, and it must be located in the root directory (e.g., yoursite.com/robots.txt). Subdomains such as blog.yoursite.com need their own file.

5. Is Robots.txt required for small websites?

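Not strictly. Without a Robots.txt file, crawlers simply assume they may crawl everything, and WordPress serves a virtual one by default anyway. Still, even small sites benefit from clear bot instructions and a Sitemap line pointing to their XML sitemap.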

Conclusion: Build a Crawl‑Friendly, Authority Website

Robots.txt may be a small file, but it plays a massive role in your site’s SEO health. By guiding search engine crawlers correctly, you ensure that your best content receives the attention it deserves.

A clean, AdSense‑friendly and optimized Robots.txt signals professionalism, technical competence and trust — all essential factors for long‑term growth. Take a few minutes to review your Robots.txt today. This simple step can protect your rankings, improve crawl efficiency and strengthen your website’s foundation for years to come.

Mastering your Robots.txt file is a hallmark of a professional digital marketer. It is a small file that carries a big responsibility: ensuring search engines respect your server and prioritize your best content. By following the steps in this Digital Smart Guide, you are taking another step toward building a high-authority, technically sound website.

Suggested Further Reading

- Understanding Google Search Console: Grow Your Website Traffic

- Mastering Google Analytics 4: The Essential Strategy for Growth

- How to Link GSC and GA4 for Advanced Data Insights

- Video Schema Markup: Rank on Google Video Carousel Fast

- Free Product Schema Generator Tool – (JSON-LD): Instantly Create Markup

- Rich Results Test Guide: How to Validate Schema and Fix All Errors

- Complete Guide to Google Business Profile and Local SEO Growth

- How Google Ranks Websites: The SEO Algorithm Explained

- SEO Mistakes to Avoid for New Websites (Beginner Guide)

- Word and Character Counter Tool – Count Words Instantly